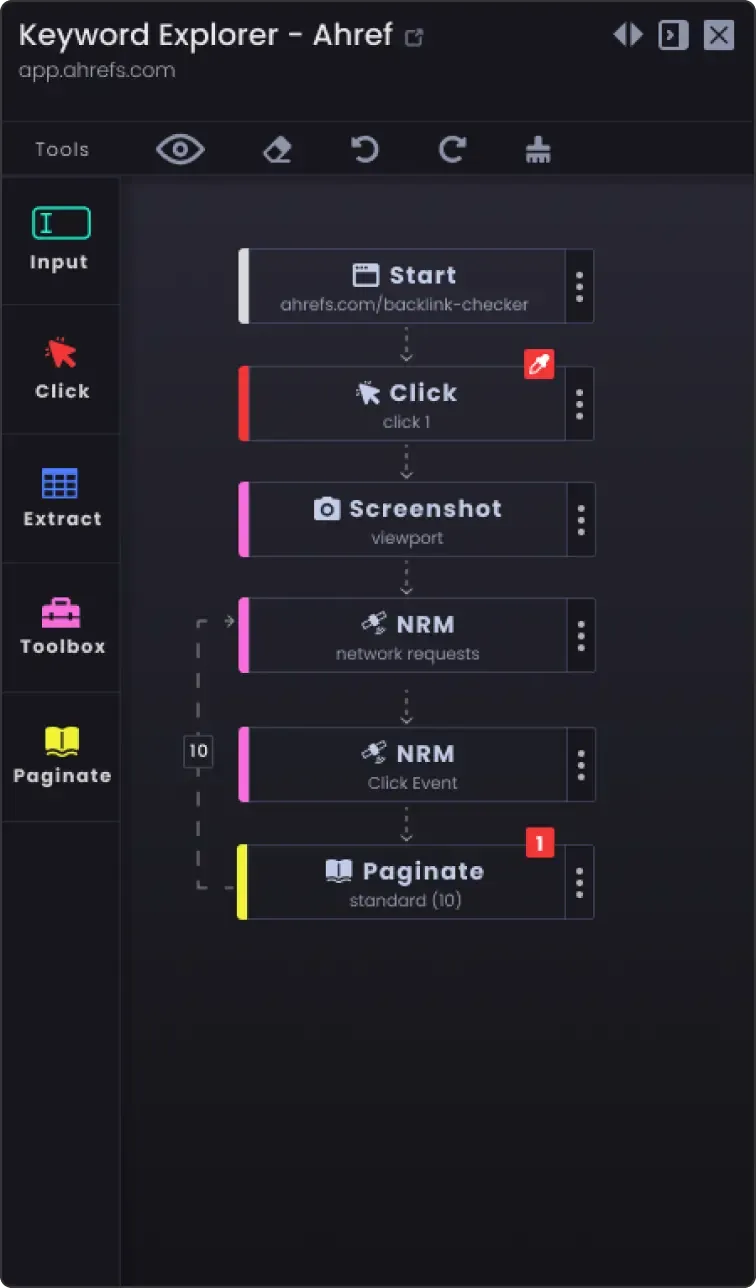

Wikipedia data can be valuable for projects, research, and business. With over 6,942,000 articles in English and millions more in other languages, Wikipedia covers virtually any topic you might be interested in. Automatio.ai lets you automate the process of scraping and organizing this data. Because it is a no-code tool, you don't need programming skills: you simply select elements on the webpage through its visual interface, and Automatio collects the information you need.

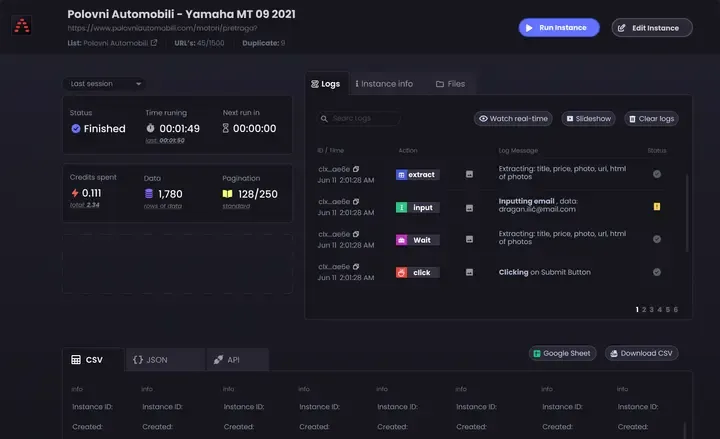

Using Automatio to collect Wikipedia data saves time and effort. You can use the data to monitor trends, perform market analysis, or gather content for educational purposes. Whether you need to compare historical facts, conduct language studies, or enrich your SEO strategy with relevant information, scraping Wikipedia can give you a valuable edge. You can also export the collected data in formats such as CSV or JSON, or send it directly to tools like Google Sheets, so it is ready to use in your projects or research.